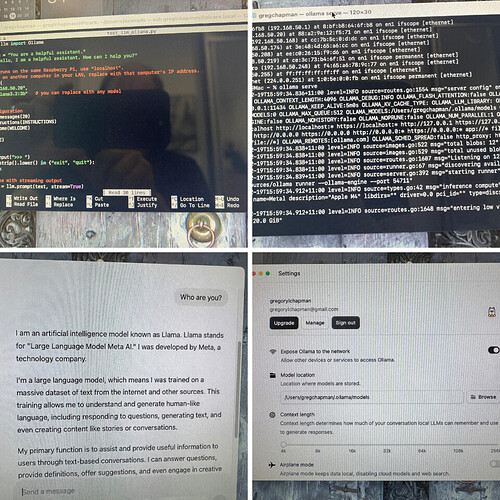

I’ve managed to get everything working on my PiCar-X up until Section 17 (Ollama).

When I try the “2. Test Ollama” section, I get this error:

    Hello, I am a helpful assistant. How can I help you?

    >>> hello
    Traceback (most recent call last):
      File "/usr/lib/python3/dist-packages/urllib3/connection.py", line 198, in _new_conn
        sock = connection.create_connection(
            (self._dns_host, self.port),
        ...<2 lines>...
            socket_options=self.socket_options,
        )
      File "/usr/lib/python3/dist-packages/urllib3/util/connection.py", line 85, in create_connection
        raise err
      File "/usr/lib/python3/dist-packages/urllib3/util/connection.py", line 73, in create_connection
        sock.connect(sa)
        ~~~~~~~~~~~~^^^^
    ConnectionRefusedError: [Errno 111] Connection refused

    The above exception was the direct cause of the following exception:

    Traceback (most recent call last):
      File "/usr/lib/python3/dist-packages/urllib3/connectionpool.py", line 787, in urlopen
        response = self._make_request(
            conn,
        ...<10 lines>...
            **response_kw,
        )
      File "/usr/lib/python3/dist-packages/urllib3/connectionpool.py", line 493, in _make_request
        conn.request(
        ~~~~~~~~~~~~^
            method,
            ^^^^^^^
        ...<6 lines>...
            enforce_content_length=enforce_content_length,
            ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
        )
        ^
      File "/usr/lib/python3/dist-packages/urllib3/connection.py", line 445, in request
        self.endheaders()
        ~~~~~~~~~~~~~~~^^
      File "/usr/lib/python3.13/http/client.py", line 1333, in endheaders
        self._send_output(message_body, encode_chunked=encode_chunked)
        ~~~~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
      File "/usr/lib/python3.13/http/client.py", line 1093, in _send_output
        self.send(msg)
        ~~~~~~~~~^^^^^
      File "/usr/lib/python3.13/http/client.py", line 1037, in send
        self.connect()
        ~~~~~~~~~~~~^^
      File "/usr/lib/python3/dist-packages/urllib3/connection.py", line 276, in connect
        self.sock = self._new_conn()
                    ~~~~~~~~~~~~~~^^
      File "/usr/lib/python3/dist-packages/urllib3/connection.py", line 213, in _new_conn
        raise NewConnectionError(
            self, f"Failed to establish a new connection: {e}"
        ) from e
    urllib3.exceptions.NewConnectionError: <urllib3.connection.HTTPConnection object at 0x7f7006dbe0>: Failed to establish a new connection: [Errno 111] Connection refused

    The above exception was the direct cause of the following exception:

    Traceback (most recent call last):
      File "/usr/lib/python3/dist-packages/requests/adapters.py", line 667, in send
        resp = conn.urlopen(
            method=request.method,
        ...<9 lines>...
            chunked=chunked,
        )
      File "/usr/lib/python3/dist-packages/urllib3/connectionpool.py", line 841, in urlopen
        retries = retries.increment(
            method, url, error=new_e, _pool=self, _stacktrace=sys.exc_info()[2]
        )
      File "/usr/lib/python3/dist-packages/urllib3/util/retry.py", line 519, in increment
        raise MaxRetryError(_pool, url, reason) from reason # type: ignore[arg-type]
        ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
    urllib3.exceptions.MaxRetryError: HTTPConnectionPool(host='192.168.50.20', port=11434): Max retries exceeded with url: /api/chat (Caused by NewConnectionError('<urllib3.connection.HTTPConnection object at 0x7f7006dbe0>: Failed to establish a new connection: [Errno 111] Connection refused'))

    During handling of the above exception, another exception occurred:

    Traceback (most recent call last):
      File "/home/greglchapman/picar-x/example/17.text_vision_talk.py", line 48, in
        response = llm.prompt(input_text, stream=True, image_path=img_path)
      File "/usr/local/lib/python3.13/dist-packages/sunfounder_voice_assistant/llm/llm.py", line 236, in prompt
        response = self.chat(stream, **kwargs)
      File "/usr/local/lib/python3.13/dist-packages/sunfounder_voice_assistant/llm/llm.py", line 199, in chat
        response = requests.post(self.url, headers=headers, data=json.dumps(data), stream=stream)
      File "/usr/lib/python3/dist-packages/requests/api.py", line 115, in post
        return request("post", url, data=data, json=json, **kwargs)
      File "/usr/lib/python3/dist-packages/requests/api.py", line 59, in request
        return session.request(method=method, url=url, **kwargs)
               ~~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
      File "/usr/lib/python3/dist-packages/requests/sessions.py", line 589, in request
        resp = self.send(prep, **send_kwargs)
      File "/usr/lib/python3/dist-packages/requests/sessions.py", line 703, in send
        r = adapter.send(request, **kwargs)
      File "/usr/lib/python3/dist-packages/requests/adapters.py", line 700, in send
        raise ConnectionError(e, request=request)
    requests.exceptions.ConnectionError: HTTPConnectionPool(host='192.168.50.20', port=11434): Max retries exceeded with url: /api/chat (Caused by NewConnectionError('<urllib3.connection.HTTPConnection object at 0x7f7006dbe0>: Failed to establish a new connection: [Errno 111] Connection refused'))
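The last frames show that the call that fails is the `requests.post` to `/api/chat` on `192.168.50.20:11434`, so the problem can be separated from the PiCar-X example with a bare TCP check. This is a minimal sketch using only the standard library. `can_connect` is a hypothetical helper name, and the host and port are simply copied from the traceback:

```python
import socket


def can_connect(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds.

    Hypothetical helper for illustration; it only tests reachability, not the Ollama API.
    """
    try:
        # create_connection resolves the address and performs the TCP handshake
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError as exc:
        # ConnectionRefusedError (errno 111), timeouts and DNS failures are all OSError subclasses
        print(f"{host}:{port} unreachable: {exc}")
        return False


if __name__ == "__main__":
    # Host and port taken from the traceback above (the Ollama server the example targets)
    print(can_connect("192.168.50.20", 11434))
```

If this prints `False` after `[Errno 111] Connection refused` when run on the Pi, the failure is reproduced outside the example script: the host at 192.168.50.20 responded, but nothing accepted the connection on port 11434. From the same shell, `curl http://192.168.50.20:11434` should print `Ollama is running` once the server is reachable.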

What’s going on?