Not really. More memory will always be better, but depending on how far you want to push the boundaries, 2G might be enough, or 16G not enough.

I run PiDog on a 4G RPi 4. As I pushed it harder, yes, I ran out of memory, even when using a few swap tricks:

```shell
sudo apt install zram-tools

sudo dphys-swapfile swapoff

# Edit to increase swap as needed (CONF_SWAPSIZE, in MB). I used 8G
sudo nano /etc/dphys-swapfile

# AND the same for this one (the script's built-in CONF_MAXSWAP cap). I used 8G
sudo nano /sbin/dphys-swapfile

sudo dphys-swapfile setup
sudo dphys-swapfile swapon
sudo reboot now
```
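To confirm after the reboot that the bigger swap actually took effect, something like the following works. It is a small sketch that only reads `/proc/meminfo` (where the values are in kB); the function name `swap_summary` is just an illustrative choice, not part of any package.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print total and used swap in MB, summed over all
# swap devices (the dphys swap file plus the zram device from zram-tools).
swap_summary() {
  awk '/^SwapTotal:/ {t=$2} /^SwapFree:/ {f=$2}
       END {printf "swap total: %d MB, used: %d MB\n", t/1024, (t-f)/1024}' /proc/meminfo
}
```

Run `swap_summary` after the reboot. For a per-device breakdown, `swapon --show` and `zramctl` (both from util-linux) list the swap file and the zram device separately.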

But it was then also being thermally throttled because it was running hotter, and I never bothered to work out which was slowing it down more: the swapping or the throttling.
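One way to at least see whether throttling is happening is the firmware's own flag word. On Raspberry Pi OS, `vcgencmd get_throttled` prints something like `throttled=0x50005`, where bits 0-3 report the current state and bits 16-19 report whether that condition has occurred since boot. The sketch below decodes that value. The function name `decode_throttled` is hypothetical, and the bit meanings are the ones listed in the Raspberry Pi documentation for `get_throttled`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: decode the bitmask printed by `vcgencmd get_throttled`.
# Accepts either "throttled=0x50005" or a bare "0x50005".
decode_throttled() {
  local raw=${1#throttled=}   # strip the "throttled=" prefix if present
  local value=$(( raw ))      # bash arithmetic understands the 0x prefix
  local -a names=(
    [0]="under-voltage now"
    [1]="ARM frequency capped now"
    [2]="throttled now"
    [3]="soft temperature limit active now"
    [16]="under-voltage has occurred"
    [17]="ARM frequency capping has occurred"
    [18]="throttling has occurred"
    [19]="soft temperature limit has occurred"
  )
  local bit
  # Print one line per flag that is set, in ascending bit order.
  for bit in "${!names[@]}"; do
    if (( value & (1 << bit) )); then
      echo "${names[$bit]}"
    fi
  done
  if (( value == 0 )); then
    echo "no throttling or under-voltage flags set"
  fi
}
```

Usage on the Pi: `decode_throttled "$(vcgencmd get_throttled)"`, alongside `vcgencmd measure_temp` for the current SoC temperature. If the "has occurred" throttling bits are set during a heavy run, heat is at least part of the slowdown; if they stay clear, swapping is the more likely culprit.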

In the end, for intensive work, I decided to keep PiDog running on my 4G RPi 4 and use a distributed architecture via ROS 2, spread across my network as well as across all 4 of the RPi's cores, rather than have a single program thrash one core to death!

That way, the lightweight stuff runs directly on the PiDog's RPi 4 and the intensive stuff on an external computer.

So I never need to worry about the PiDog's limits, memory, thermal or otherwise.
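A minimal sketch of that split, assuming ROS 2 (with the standard `demo_nodes_cpp` package) is installed and sourced on both the Pi and the external computer, and that both are on the same LAN. The `talker`/`listener` demo nodes stand in for your own lightweight and heavy nodes; the domain ID 42 and the function names `pidog_side`/`pc_side` are arbitrary, hypothetical choices.

```shell
#!/usr/bin/env bash
# Source this file on BOTH machines.

# Must be identical on both machines so ROS 2 discovery finds the other side.
# The ROS 2 docs suggest picking a value between 0 and 101 on Linux.
export ROS_DOMAIN_ID=42

# Run on the PiDog's RPi 4: lightweight node that publishes data.
pidog_side() {
  ros2 run demo_nodes_cpp talker
}

# Run on the external computer: the heavy side that subscribes to it.
pc_side() {
  ros2 run demo_nodes_cpp listener
}
```

If the listener on the PC prints the talker's messages, the two machines can see each other. Because each `ros2 run` starts a separate process, several nodes launched this way on the Pi can also be scheduled across its 4 cores instead of competing inside one program.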

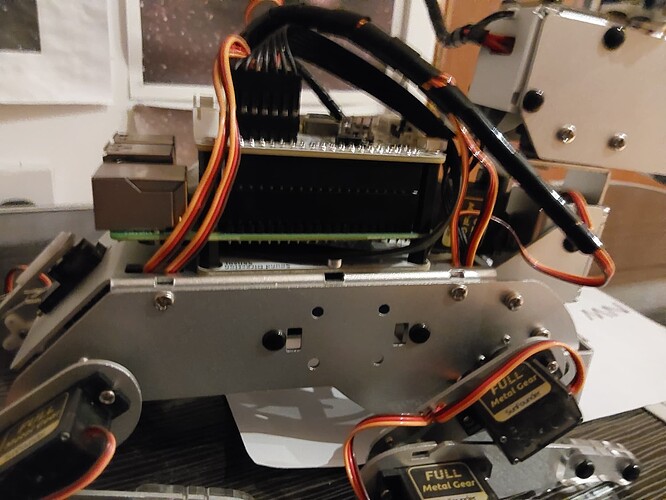

I also added an extra riser to allow better airflow.

This is what I went for in the end, but other strategies are available! And I’m sure you’ll get a plethora of competing answers!

If I were choosing today, I like the new servos and power supply of the V2, so I'd go for that but run it with a Pi 4 on a distributed network IF I wanted to do intensive stuff. For simpler stuff I’d stick with the V1 + Pi 4, mainly due to cost.

This is 100% pure opinion, not fact based.

The following also ties in with my own experiences